Lyra2

Lyra2 is a key derivation function (KDF), also called password hashing scheme (PHS), that received a special recognition during the Password Hashing Competition in July 2015. It was designed by Marcos A. Simplicio Jr., Leonardo C. Almeida, Ewerton R. Andrade, Paulo C. F. dos Santos, and Paulo S. L. M. Barreto from Escola Politécnica da Universidade de São Paulo.

Contents

Review of Lyra2 Algorithm

Lyra2 is an improvement of Lyra, previously proposed by the same authors. Lyra2 preserves the security, efficiency and flexibility of its predecessor, including: the ability to configure the desired amount of memory, processing time and parallelism to be used by the algorithm and the capacity of providing a high memory usage with a processing time similar to that obtained with Scrypt. In addition, it brings important improvements when compared to its predecessor:

- it allows a higher security level against attack venues involving time-memory trade-offs;

- it allows legitimate users to benefit more effectively from the parallelism capabilities of their own platforms;

- it includes tweaks for increasing the costs involved in the construction of dedicated hardware to attack the algorithm;

- it balances resistance against side-channel threats and attacks relying on cheaper (and, hence, slower) storage devices.

Lyra2 is released under a public domain, and provides two main extensions:

- Lyra2-δ, gives the user better control over the algorithm’s bandwidth usage;

- Lyra2p, takes advantage of parallelism capabilities on the legitimate user’s platform.

This algorithm enables parameterization in terms of:

- execution time (time cost

);

); - memory required (number of rows

, and number of columns

, and number of columns  );

); - degree of parallelism (number of threads

);

); - underlying permutation function (can be seen as the main cryptographic primitive);

- number of blocks used by the underlying permutation function (bitrate);

- number of rounds performed for the underlying permutation function (

);

); - number of bits to be used in rotations (

);

); - output length (

).

).

Lyra2 algorithm is used in mining as a part of other modern algorithms like Lyra2Rev2. So, there are mining pools and coins that work on Lyra2’s modern versions.

Lyra2’s Strengths

- High resistance against processing-memory tradeoffs: estimated processing costs of attacks with low memory usage involve a factor that grows exponentially with time cost due to recomputations

- Memory and time costs can be decoupled, allowing the resources’ usage to be fine-tuned

- Fast due to use of reduced-round sponge function in the algorithm’s core

- Can provide outputs of any desired length, behaving as a Key Derivation Function (KDF)

- Design combines resistance to side-channel attacks (during the whole Setup phase) and to attacks involving cheap (hence, low-speed) memory devices, balancing these conflicting requirements

- Considers a wider range of configurations for protecting against attacking platforms while optimizing execution on legitimate platform, such as:

- Support for parallelism, for multicore platforms, without giving much advantage to GPU-based attacks

- Capability of using different underlying sponge functions depending on the target platform (e.g., Blake2b for software implementations; Keccak for hardware implementations; BlaMka for additional resistance against hardware platforms; etc.)

- Ability to raise the algorithm’s memory bandwidth usage (note: the original specification should already max out the bandwidth in current machines, but feature may be useful for future hardware)

Design

As any PHS, Lyra2 takes as input a salt and a password, creating a pseudorandom output that can then be used as key material for cryptographic algorithms or as an authentication string.

Internally, the scheme’s memory is organized as a matrix that is expected to remain in memory during the whole password hashing process: since its cells are iteratively read and written, discarding a cell for saving memory leads to the need of recomputing it whenever it is accessed once again, until the point it was last modified.

The construction and visitation of the matrix is done using a stateful combination of the absorbing, squeezing and duplexing operations of the underlying sponge (i.e., its internal state is never reset to zero), ensuring the sequential nature of the whole process.

Also, the number of times the matrix’s cells are revisited after initialization is defined by the user, allowing Lyra2’s execution time to be fine-tuned according to the target platform’s resources.# *** Functions/symbols *** # || Concatenate two strings # ^ Bitwise XOR # [+] Wordwise add operation (i.e., ignoring carries between words) # % Modulus # W The target machine's word size (usually, 32 or 64) # omega Number of bits to be used in rotations (recommended: a multiple of the machine's word size, W) # >>> Right rotation # rho Number of rounds for reduced squeeze or duplexing operations # blen Sponge's block size in bytes # H or H_i Sponge with block size blen (in bytes) and underlying permutation f # H.absorb(input) Sponge's absorb operation on input # H.squeeze(len) Sponge's squeeze operation of len bytes # H.squeeze_{rho}(len) Sponge's squeeze operation of len bytes using rho rounds of f # H.duplexing(input,len) Sponge's duplexing operation on input, producing len bytes # H.duplexing_{rho}(input,len) Sponge's duplexing operation on input, using rho rounds of f, producing len bytes # pad(string) Pads a string to a multiple of blen bytes (padding rule: 10*1) # lsw(input) The least significant word of input # len(string) Length of a string, in bytes # syncThreads() Synchronize parallel threads # swap(input1,input2) Swap the value of two inputs # C Number of columns on the memory matrix (usually, 64, 128, 256, 512 or 1024) # P Degree of parallelism (P >= 1 and (m_cost/2) % P = 0) # *** Inputs *** # password # salt # t_cost # m_cost # outlen # *** Algorithm without parallelism *** # ** Bootstrapping phase: Initializes the sponge's state and local variables # Byte representation of input parameters (others can be added) params = outlen || len(password) || len(salt) || t_cost || m_cost || C # Initializes the sponge's state (after that, password can be overwritten) H.absorb( pad(password || salt || params) ) # Initializes visitation step, window and first rows that will feed gap = 1 stp = 1 wnd = 2 sqrt = 2 prev0 = 2 row1 = 1 prev1 = 0 # ** Setup phase: Initializes a (m_cost x C) memory matrix, it's cells having blen-byte cells # Initializes M[0], M[1] and M[2] for col = 0 to C-1 M[0][C-1-col] = H.squeeze_{rho}(blen) for col = 0 to C-1 M[1][C-1-col] = H.duplexing_{rho}( M[0][col], blen) for col = 0 to C-1 M[2][C-1-col] = H.duplexing_{rho}( M[1][col], blen) # Filling Loop: initializes remainder rows for row0 = 3 to m_cost-1 # Columns Loop: M[row0] is initialized and M[row1] is updated for col = 0 to C-1 rand = H.duplexing_{rho}( M[row1][col] [+] M[prev0][col] [+] M[prev1][col], blen) M[row0][C-1-col] = M[prev0][col] ^ rand M[row1][col] = M[row1][col] ^ ( rand >>> omega ) # Rows to be revisited in next loop prev0 = row0 prev1 = row1 row1 = (row1 + stp) % wnd # Window fully revisited if (row1 = 0) # Doubles window and adjusts step wnd = 2 * wnd stp = sqrt + gap gap = -gap # Doubles sqrt every other iteration if (gap = -1) sqrt = 2 * sqrt # ** Wandering phase: Iteratively overwrites pseudorandom cells of the memory matrix # Visitation Loop: (2 * m_cost * t_cost) rows revisited in pseudorandom fashion for wCount = 0 to ( (m_cost * t_cost) - 1) # Picks pseudorandom rows row0 = lsw(rand) % m_cost row1 = lsw( rand >>> omega ) % m_cost # Columns Loop: updates both M[row0] and M[row1] for col = 0 to C-1 # Picks pseudorandom columns col0 = lsw( ( rand >>> omega ) >>> omega ) % C col1 = lsw( ( ( rand >>> omega ) >>> omega ) >>> omega ) % C rand = H.duplexing_{rho}( M[row0][col] [+] M[row1][col] [+] M[prev0][col0] [+] M[prev1][col1], blen) M[row0][col] = M[row0][col] ^ rand M[row1][col] = M[row1][col] ^ ( rand >>> omega ) # Next iteration revisits most recently updated rows prev0 = row0 prev1 = row1 # ** Wrap-up phase: output computation # Absorbs a final column with a full-round sponge H.absorb( M[row0][0] ) # Squeezes outlen bits with a full-round sponge output = H.squeeze(outlen) # Provides outlen-long bitstring as output return output # *** Algorithm with parallelism *** for each i in [0,P]: # ** Bootstrapping phase: Initializes the sponge's state and local variables # Byte representation of input parameters (others can be added) params = outlen || len(password) || len(salt) || t_cost || m_cost || C || P || i # Initializes the sponge's state (after that, password can be overwritten) H_i.absorb( pad(password || salt || params) ) # Initializes visitation step, window and first rows that will feed gap = 1 stp = 1 wnd = 2 sqrt = 2 sync = 4 j = i prev0 = 2 rowP = 1 prevP = 0 # ** Setup phase: Initializes a (m_cost x C) memory matrix, it's cells having blen-byte cells # Initializes M_i[0], M_i[1] and M_i[2] for col = 0 to C-1 M_i[0][C-1-col] = H_i.squeeze_{rho}(blen) for col = 0 to C-1 M_i[1][C-1-col] = H_i.duplexing_{rho}( M_i[0][col], blen) for col = 0 to C-1 M_i[2][C-1-col] = H_i.duplexing_{rho}( M_i[1][col], blen) # Filling Loop: initializes remainder rows for row0 = 3 to ( (m_cost / P) - 1 ) # Columns Loop: M_i[row0] is initialized and M_j[row1] is updated for col = 0 to C-1 rand = H_i.duplexing_{rho}( M_j[rowP][col] [+] M_i[prev0][col] [+] M_j[prevP][col], blen) M_i[row0][C-1-col] = M_i[prev0][col] ^ rand M_j[rowP][col] = M_j[rowP][col] ^ ( rand >>> omega ) # Rows to be revisited in next loop prev0 = row0 prevP = rowP rowP = (rowP + stp) % wnd # Window fully revisited if (rowP = 0) # Doubles window and adjusts step wnd = 2 * wnd stp = sqrt + gap gap = -gap # Doubles sqrt every other iteration if (gap = -1) sqrt = 2 * sqrt # Synchronize point if (row0 = sync) sync = sync + (sqrt / 2) j = (j + 1) % P syncThreads() syncThreads() # ** Wandering phase: Iteratively overwrites pseudorandom cells of the memory matrix wnd = m_cost / (2 * P) sync = sqrt off0 = 0 offP = wnd # Visitation Loop: (2 * m_cost * t_cost / P) rows revisited in pseudorandom fashion for wCount = 0 to ( ( (m_cost * t_cost) / P) - 1) # Picks pseudorandom rows and slices (j) row0 = off0 + (lsw(rand) % wnd) rowP = offP + (lsw( rand >>> omega ) % wnd) j = lsw( ( rand >>> omega ) >>> omega ) % P # Columns Loop: update M_i[row0] for col = 0 to C-1 # Picks pseudorandom column col0 = lsw( ( ( rand >>> omega ) >>> omega ) >>> omega ) % C rand = H_i.duplexing_{rho}( M_i[row0][col] [+] M_i[prev0][col0] [+] M_j[rowP][col], blen) M_i[row0][col] = M_i[row0][col] ^ rand # Next iteration revisits most recently updated rows prev0 = row0 # Synchronize point if (wCount = sync) sync = sync + sqrt swap(off0,offP) syncThreads() syncThreads() # ** Wrap-up phase: output computation # Absorbs a final column with a full-round sponge H_i.absorb( M_i[row0][0] ) # Squeezes outlen bits with a full-round sponge output_i = H_i.squeeze(outlen) # Provides outlen-long bitstring as output return output_0 ^ ... ^ output_{P-1} Security analysis

Against Lyra2, the processing cost of attacks using  of the amount of memory employed by a legitimate user is expected to be between

of the amount of memory employed by a legitimate user is expected to be between  and

and  , the latter being a better estimate for

, the latter being a better estimate for  , instead of the

, instead of the  achieved when the amount of memory is

achieved when the amount of memory is  , where

, where  is a user-defined parameter to define a processing time.

is a user-defined parameter to define a processing time.

This compares well to scrypt, which displays a cost of  when the memory usage is

when the memory usage is  , and with other solutions in the literature, for which the best result is

, and with other solutions in the literature, for which the best result is  .

.

Nonetheless, in practice these solutions usually involve a value of  (memory usage) lower than those attained with the Lyra2 for the same processing time.

(memory usage) lower than those attained with the Lyra2 for the same processing time.

Performance

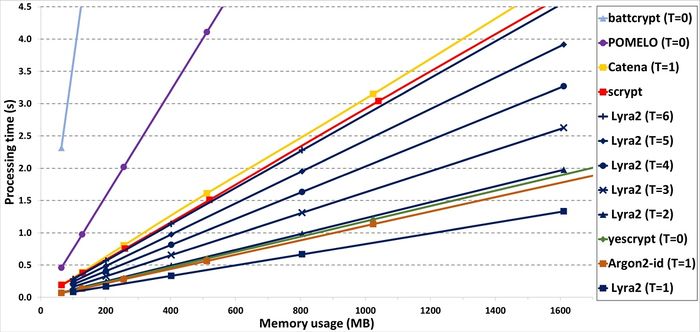

The processing time obtained with a SSE single-core implementation of Lyra2 are illustrated in the figure besides.

This figure was extracted from, and is very similar of third-party benchmarks performed during the PHC context.

The results depicted correspond to the average execution time of Lyra2 configured with  ,

,  ,

,  bits (i.e., the inner state has 256 bits), and different

bits (i.e., the inner state has 256 bits), and different  and

and  settings, giving an overall idea of possible combinations of parameters and the corresponding usage of resources.

settings, giving an overall idea of possible combinations of parameters and the corresponding usage of resources.

As shown in this figure, Lyra2 is able to execute in: less than 1 s while using up to 400 MB (with  and

and  ) or up to 1 GB of memory (with

) or up to 1 GB of memory (with  and

and  ); or in less than 5 s with 1.6 GB (with

); or in less than 5 s with 1.6 GB (with  and

and  ).

).

All tests were performed on an Intel Xeon E5-2430 (2.20 GHz with 12 Cores, 64 bits) equipped with 48 GB of DRAM, running Ubuntu 14.04 LTS 64 bits, and the source code was compiled using gcc (GNU Compiler Collection) 4.9.2.